
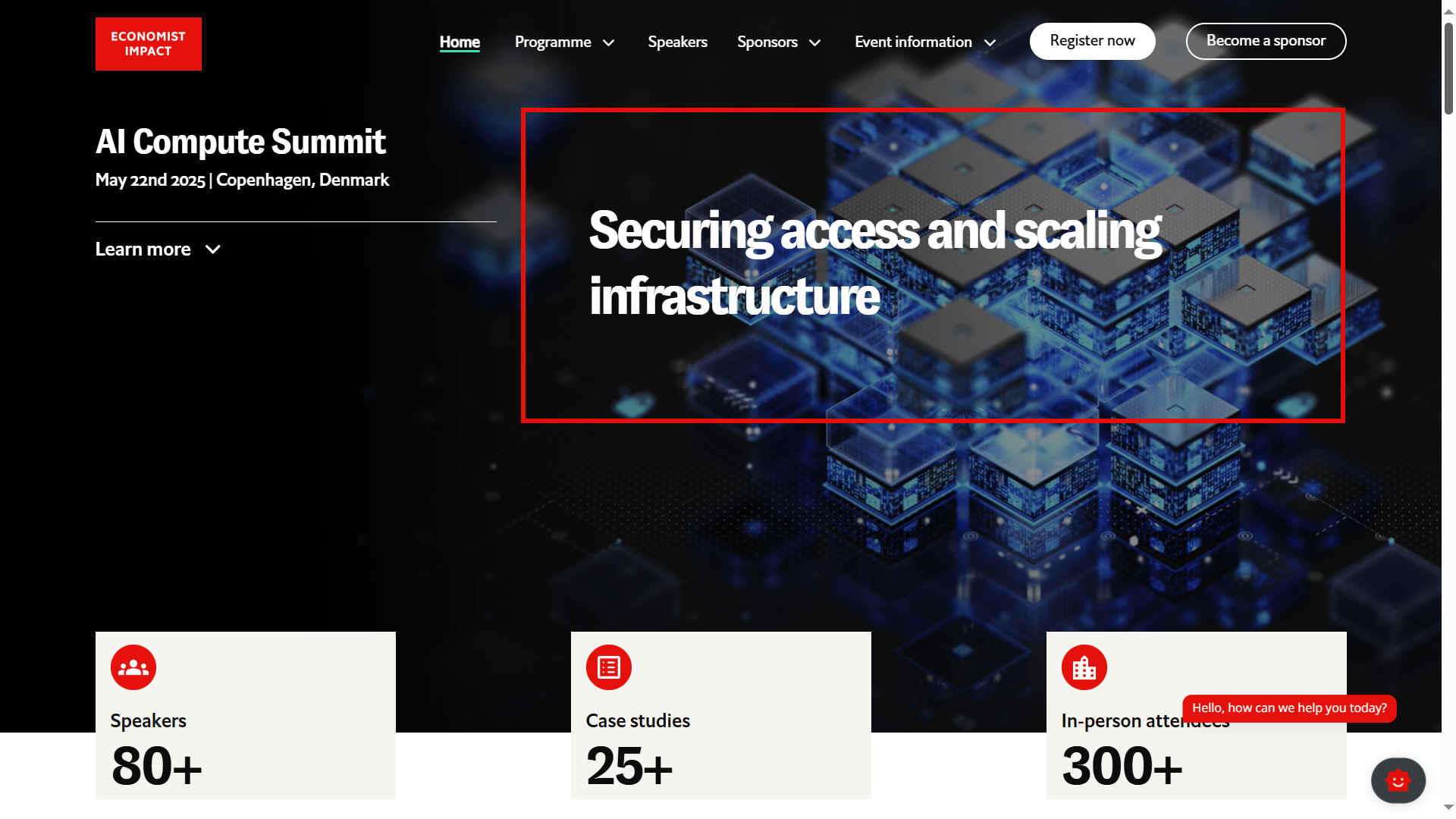

The rapid rise of AI is reshaping industries, but every AI system demands immense computing power. As AI technologies evolve, competition for the scarce chips suited to AI computation, such as graphics processing units (GPUs) and other accelerators, will intensify.

But it’s not just about hardware. The energy required to power these models is huge. As models grow in size and complexity, so too do concerns about their environmental impact and the escalating costs of the energy they consume.

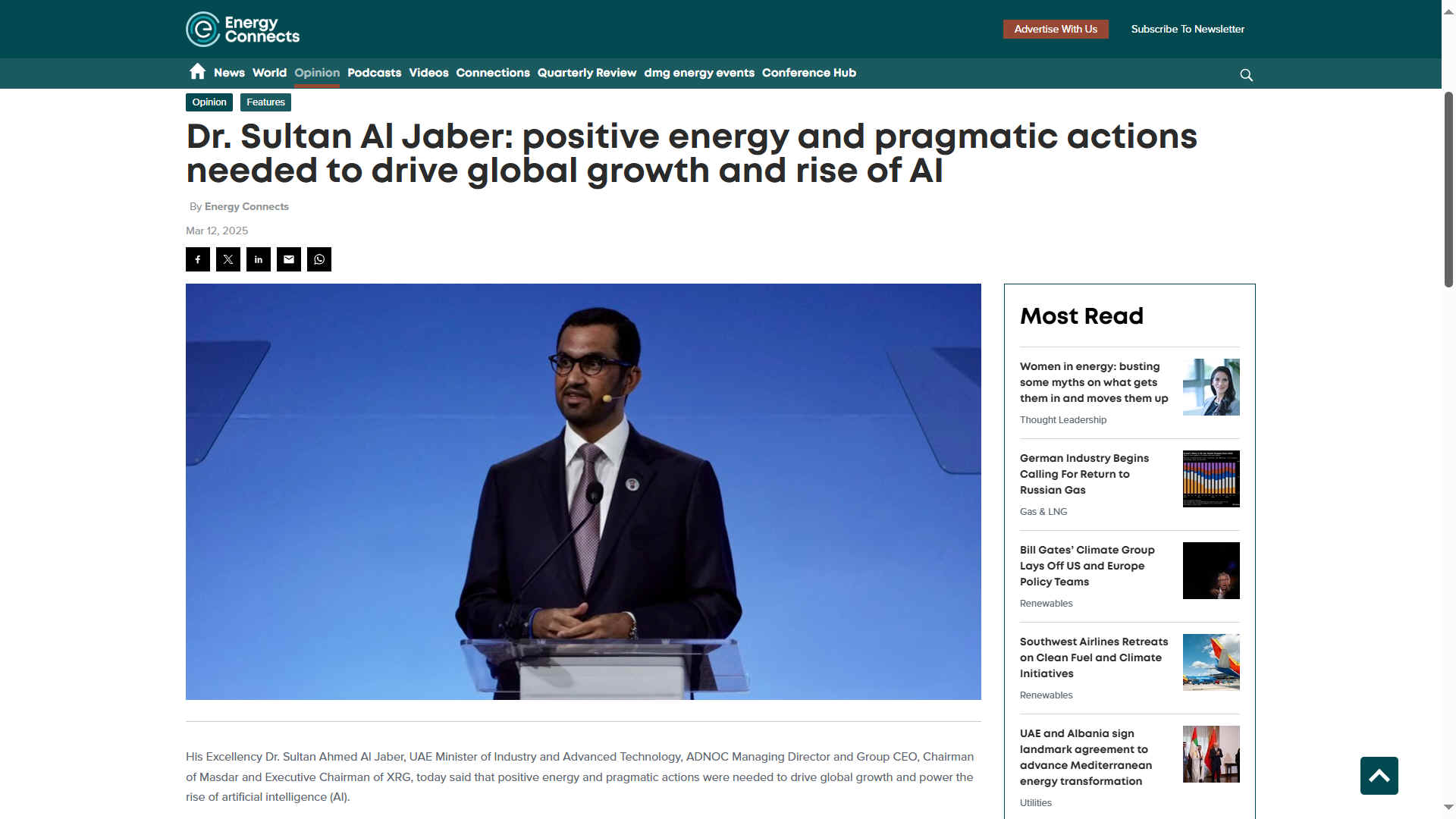

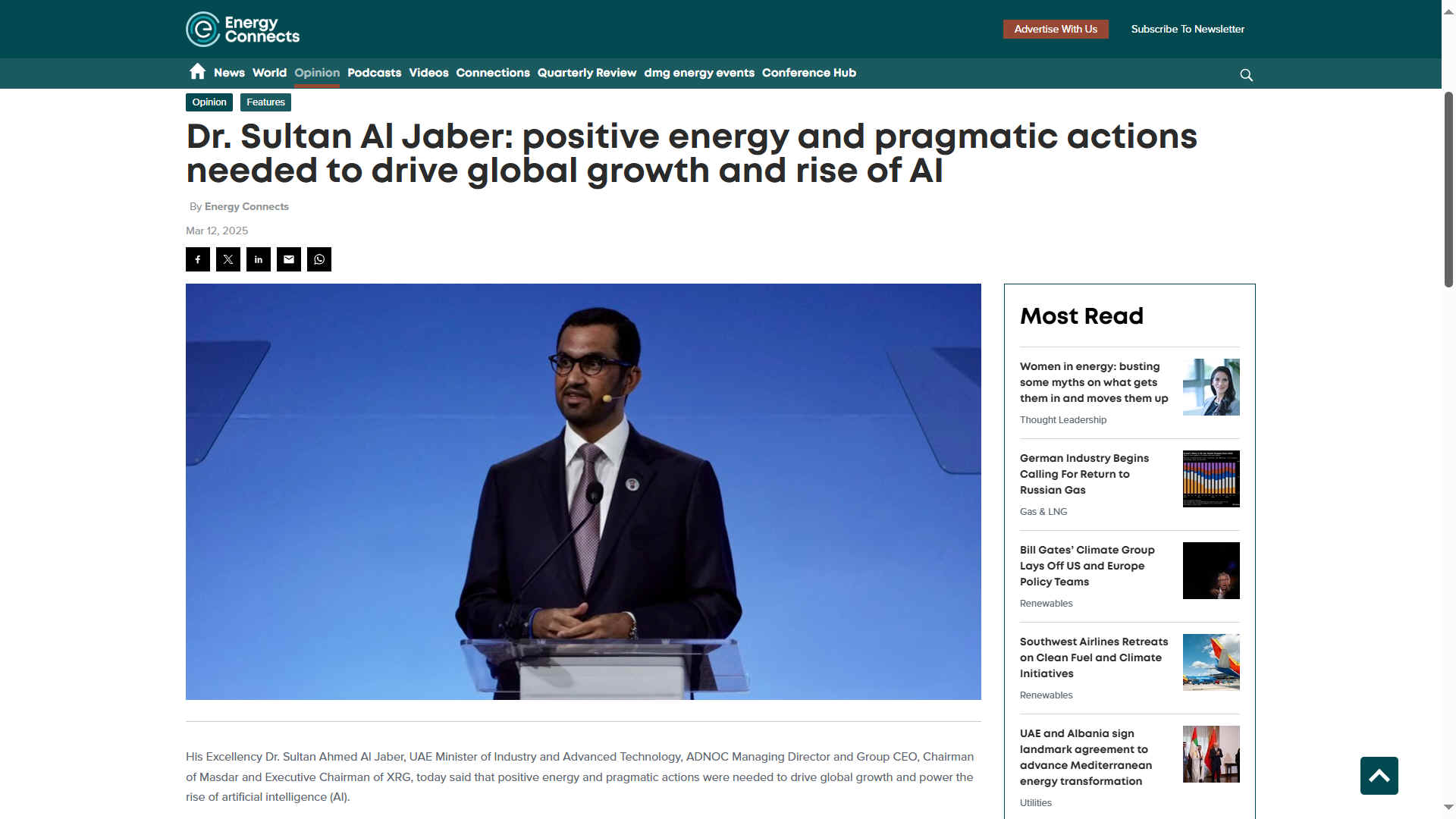

His Excellency Dr. Sultan Ahmed Al Jaber, UAE Minister of Industry and Advanced Technology, ADNOC Managing Director and Group CEO, said that positive energy and pragmatic actions were needed to drive global growth and power the rise of artificial intelligence (AI).

Speaking at CERAWeek in Houston, Texas, in March 2025, Dr. Al Jaber called for durable, stable policies to meet the growing demand for energy. “The world is finally waking up to the fact that energy is the solution. Energy is the beating heart of economies, a key driver of prosperity and is fundamental to every aspect of human development. If we want a pro-growth world, we need pragmatic actions and policies that are pro-growth, pro-investment, pro-energy and pro-people.”

Dr. Al Jaber said that every energy option was needed and an ‘and-and’ approach that embraces diverse energy options was required to meet the rapid growth in worldwide demand. “We know that by 2035, there will be almost 9 billion people on this planet. In line with this growth, oil demand will increase from 103 to at least 109 million barrels per day. LNG and chemicals will expand by over 40% and total electricity demand will surge from 9,000GW to 15,000GW, which is a staggering 70% increase. We will need more LNG, more low-carbon oil, more nuclear and more commercially-viable renewables to meet all this demand.”
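The relative increases implied by the figures in the quote can be worked out directly from the quoted numbers, with no other assumptions:

$$
\frac{15\,000 - 9\,000}{9\,000} = \frac{6\,000}{9\,000} \approx 0.667 \;(\approx 67\%), \qquad
\frac{109 - 103}{103} = \frac{6}{103} \approx 0.058 \;(\approx 5.8\%)
$$

On these figures, projected electricity demand grows by about two-thirds, which the speech rounds to 70%, while oil demand grows by roughly 6%.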

Dr. Al Jaber went on to highlight how AI, powered by energy, has the potential to reshape the world. “Applications like ChatGPT use 10 times as much energy as a simple Google search and are growing exponentially. By 2030, in the US alone, data center power demand is expected to triple, accounting for more than 10% of US electricity use. The fact is you cannot scale AI without access to energy. Simply put, the true cost of AI is not just in code, it’s in kilowatts. The race for AI supremacy is essentially an energy play.”

In this changing commercial environment, access to advanced AI computing infrastructure will help determine the future winners and losers. Those who can secure these resources will innovate and grow, while those who can’t may fall behind.

At this event, we’ll examine the future of AI compute, addressing the challenges of building scalable, sustainable systems, the intense competition for limited resources and the strategies required to power AI responsibly and efficiently.

AGENDA

8:50 CEST - Thursday May 22nd

Chair’s opening remarks

9:00 CEST - Thursday May 22nd

Panel. The race for AI accelerators

Driven by advances in artificial intelligence, the surge in demand for chips such as GPUs is creating bottlenecks. Beyond the technical hurdles, the hardware industry faces political pressures, such as trade tensions and tariffs, that affect access to critical components. How can the industry increase chip availability while maintaining performance and sustainability? What new hardware technologies are emerging to meet the growing computational needs of AI?

Discussion topics:

- What strategies can help manufacturers and businesses increase GPU production without compromising energy efficiency?

- How can the industry reduce supply-chain disruptions while keeping up a steady flow of hardware for AI advancements?

- What alternative hardware solutions or innovations might reduce the pressure of GPU demand as AI applications continue to grow?

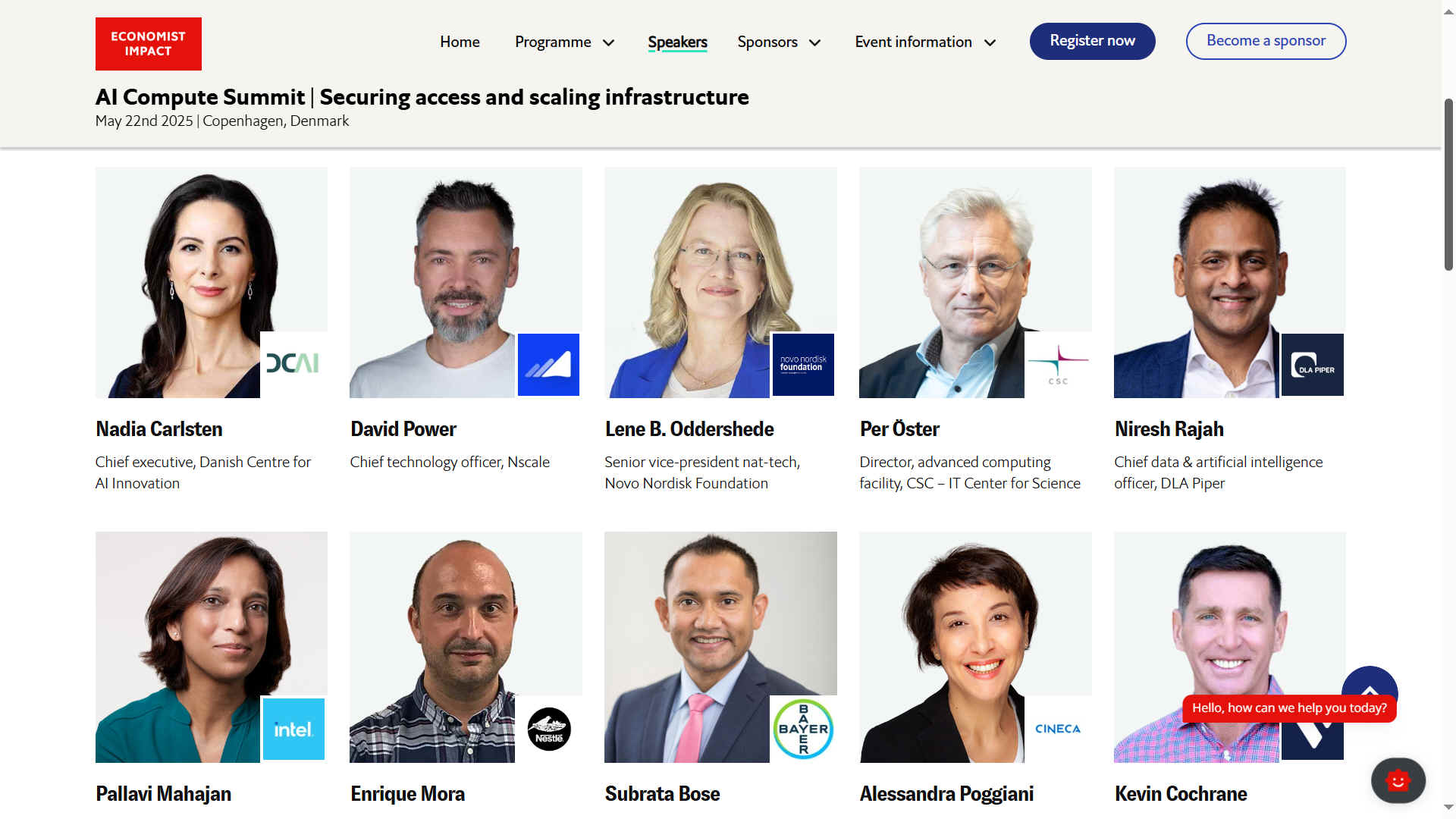

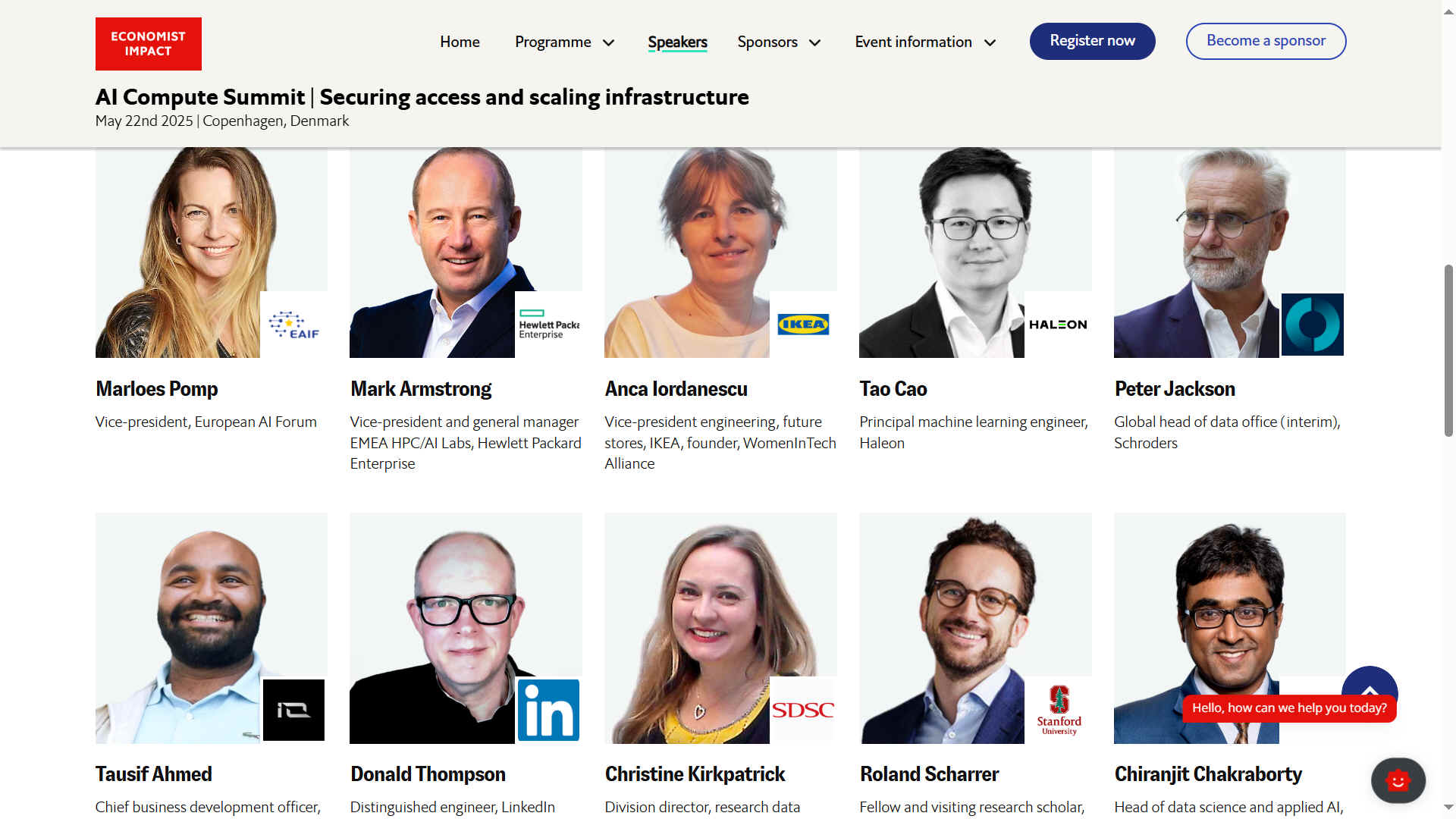

Devendra Jain: Lead, frontier technologies for operations, WEF

9:35 CEST - Thursday May 22nd

Case study. Denmark’s Gefion and computing for innovation

Denmark’s Gefion supercomputer, part of the Danish Centre for AI Innovation, supports research and experimentation. This session explores its impact on science, including how it helps research in fields such as green energy, biotech and AI-driven industry. What opportunities does Gefion present for collaboration, how is Denmark faring in global high-performance computing innovation, and what challenges lie ahead?

Nadia Carlsten: Chief executive, Danish Centre for AI Innovation

9:50 CEST - Thursday May 22nd

Panel. Souping up supercomputing with AI

Supported by Hewlett Packard Enterprise

Demand for high-performance computing (HPC) is growing. The convergence of HPC and AI is changing how compute power is used: it enables faster AI training, brings AI into HPC workflows and is reshaping HPC architectures. With initiatives like Europe’s AI Factories using EuroHPC supercomputing to develop next-generation AI models, the intersection of AI and HPC is becoming more strategic. How are HPC and AI coming together to tackle global challenges, transform research, and unlock possibilities across industries?

Discussion topics:

- How is integrating AI into HPC workflows speeding up breakthroughs in research and simulations, including initiatives like AI Factories?

- What hardware and software innovations are allowing faster AI training and changing HPC architectures?

- How can organisations build collaborative expertise in AI and HPC to maximise the potential of both technologies, aligning with Europe’s vision for a competitive AI ecosystem?

Lene B. Oddershede: Senior vice-president nat-tech, Novo Nordisk Foundation

Mark Armstrong: Vice-president and general manager EMEA HPC/AI Labs, Hewlett Packard Enterprise

Alessandra Poggiani: Director general, Cineca

10:25 CEST - Thursday May 22nd

NETWORKING BREAK - Light refreshments will be served in the networking area.

11:40 CEST - Thursday May 22nd

Panel. Harder, better, faster, stronger: building smarter AI models to reduce compute costs

Supported by Nscale

The cost of running AI models grows as they become more complex. However, large language models (LLMs) aren’t always the best solution. While LLMs generalise well, many AI problems can be solved more efficiently with smaller, specialised, or alternative models. This session explores how businesses can improve AI development by choosing the right technology for the task, reducing compute costs while maintaining performance and quality.

Discussion topics:

- How can developers determine when LLMs are overkill and opt for smaller, more efficient models?

- What methods are emerging to simplify model training and inference without losing accuracy or quality?

- How can businesses adopt smarter AI strategies to balance cost, complexity, and performance across diverse use cases?

Roland Scharrer: Fellow and visiting research scholar, Stanford University

Gayathri Radhakrishnan: Venture capital, product management, strategy, Hitachi Ventures

David Power: Chief technology officer, Nscale

12:20 CEST - Thursday May 22nd

Fireside chat. Cool customers: transforming data centres with liquid cooling

With traditional cooling methods reaching their limits, liquid cooling is emerging as a key technology for managing the intense heat generated by AI compute. How are the latest innovations in liquid cooling changing data-centre operations? What benefits does liquid cooling offer in terms of energy efficiency and sustainability?

Discussion topics:

- How is liquid cooling reshaping the design and operation of data centres?

- What specific energy and cost savings can liquid cooling deliver compared to traditional methods?

- How can liquid cooling contribute to meeting sustainability targets in data centres running AI compute tasks?

Fireside chat. Optimising compute for gen AI: efficiency in the next wave

Generative AI (gen AI) has transformed industries, but its computing requirements are enormous. How can computing for gen AI be optimised to reduce costs and energy consumption? What strategies are emerging to improve efficiency without sacrificing performance? When is gen AI most appropriately used?

Discussion topics:

- What new techniques are reducing the computational load of gen AI without affecting output quality?

- How can businesses balance model complexity with energy efficiency in large-scale gen AI?

- How are hardware innovations and algorithm optimisations cutting costs while maintaining the performance of gen AI?

12:35 CEST - Thursday May 22nd

Panel. CPU v GPU: the power struggle behind AI brainpower

CPUs have traditionally been the workhorse of computing, known for their general-purpose versatility. But GPUs, with their parallel-processing power, are more efficient for the large, highly parallel data workloads associated with AI. How do the strengths and weaknesses of CPUs and GPUs in AI compute compare?

We examine cost, energy efficiency, scalability and performance across different AI applications, and explore how industries can optimise their infrastructure by balancing the use of CPUs and GPUs.

Discussion topics:

- When do CPUs outperform GPUs in AI tasks?

- How can businesses find the best balance between CPU and GPU use for AI workloads?

- What new developments in CPU and GPU technologies will affect the future of AI infrastructure?

Ikhlaq Sidhu: Dean and professor, School of Science & Technology, IE University

13:15 CEST - Thursday May 22nd

LUNCH BREAK: Refreshments will be served in the networking area.

14:15 CEST - Thursday May 22nd

Panel. Controlling costs: balancing training and inference

The cost of AI compute is increasing quickly, driven by hardware, energy, and operations. One of the biggest factors in AI’s cost structure is the difference between training and inference. While training a model requires a significant upfront investment in computational power, inference (the process of running trained models to produce outputs) is often where long-term energy costs accumulate, because it recurs with every request. What are the best strategies to control AI compute costs through cloud resources, advanced hardware, and smart workload management?
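The training-versus-inference trade-off can be sketched with a simple linear cost model: a one-off training cost plus a per-query inference cost. This is a hypothetical illustration, not a method presented at the event; the class name `ComputeCostModel` and all figures are placeholders. It shows how, beyond a break-even query volume, cumulative inference spending overtakes the initial training investment.

```python
from dataclasses import dataclass


@dataclass
class ComputeCostModel:
    """Hypothetical linear model: one-off training cost plus per-query inference cost."""

    training_cost: float   # one-off training spend (placeholder currency units)
    cost_per_query: float  # marginal inference spend per request (placeholder)

    def inference_cost(self, queries: int) -> float:
        # Inference spend grows linearly with usage
        return self.cost_per_query * queries

    def total_cost(self, queries: int) -> float:
        # Lifetime cost = fixed training spend + usage-dependent inference spend
        return self.training_cost + self.inference_cost(queries)

    def inference_share(self, queries: int) -> float:
        # Fraction of lifetime cost attributable to inference
        total = self.total_cost(queries)
        return self.inference_cost(queries) / total if total > 0 else 0.0

    def break_even_queries(self) -> float:
        # Query volume at which cumulative inference spend equals training spend
        if self.cost_per_query <= 0:
            return float("inf")
        return self.training_cost / self.cost_per_query


if __name__ == "__main__":
    # Illustrative placeholder figures only, not real costs
    model = ComputeCostModel(training_cost=1_000_000.0, cost_per_query=0.002)
    print(f"break-even at {model.break_even_queries():,.0f} queries")
    for q in (10**6, 10**8, 10**9, 10**10):
        print(f"{q:>14,} queries: inference share {model.inference_share(q):.1%}")
```

A linear model is deliberately crude: real cost structures also include hardware depreciation, energy prices and utilisation, which this sketch ignores. It is only meant to show why inference-heavy applications shift the cost balance over time.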

Discussion topics:

- How can cloud resources reduce expenses in both AI training and inference stages?

- Which hardware innovations are helping to cut costs specifically for inference-heavy AI applications?

- What workload management techniques best optimise training costs without compromising model performance in production?

Tao Cao: Principal machine learning engineer, Haleon

Pallavi Mahajan: Corporate vice-president and general manager, Datacenter and AI, Intel

Panel. Keeping cool – innovations in data centre cooling

As AI compute grows to meet increasing global demands, cooling infrastructure is a critical challenge for data centres. Advanced cooling technologies are necessary for managing heat from high-performance AI workloads and are key to improving energy efficiency and reducing costs. From liquid cooling to airflow optimisation and beyond, which innovative solutions are most promising for the future of data centre design and operation?

Discussion topics:

- What innovative cooling technologies are emerging to handle the heat generated by large-scale AI compute?

- How can data centres balance energy efficiency, cost control, and performance through smarter cooling strategies?

- What role do sustainability goals and regulations play in driving advancements in cooling infrastructure?

14:55 CEST - Thursday May 22nd

Panel. How to clean up AI’s act

With AI expected to consume more than 10% of the world’s electricity by 2030, renewable energy sources like wind and solar are crucial to meeting demand. How can green energy be scaled up to support AI’s future without overloading the grid?

Discussion topics:

- What renewable-energy innovations can best scale to meet AI’s rising power needs?

- How can AI workloads be optimised to lower energy consumption and accommodate renewable energy sources like wind and solar?

- How can governments and the private sector collaborate to incentivise the use of renewables and maintain grid stability as the deployment of AI grows?

Christine Kirkpatrick: Division director, research data services, San Diego Supercomputer Center

Per Öster: Director, advanced computing facility, CSC – IT Center for Science

Fireside chat. Moving at the speed of AI: reducing time to inference

Faster, real-time processing is essential for many businesses. Reducing time to inference—how quickly models generate insights after deployment—has become key for optimising AI performance at scale. Which steps can be taken to streamline data processing, improve model efficiency, and ensure that AI systems can deliver rapid results even under heavy workloads? How can the network connection and speed be improved?

Discussion topics:

- What AI infrastructure innovations are reducing time to inference at scale?

- How can model architecture be optimised to deliver faster insights?

- How does edge computing cut latency and speed up AI inference?

Kevin Cochrane: Chief marketing officer, Vultr

15:10 CEST - Thursday May 22nd

Case study. Industry spotlight: Scaling AI in software as a medical device

AI-driven software as a medical device (SaMD) is changing healthcare, but how can developers overcome challenges like data privacy risks, regulatory issues, and bias in AI models? This case study examines strategies for integrating with healthcare IT systems, maintaining scalability, and delivering verified AI solutions. Explore how AI-powered SaMD is enabling breakthroughs in personalised treatment, early disease detection, and clinical decision support, while reducing costs, improving efficiency, and expanding healthcare access. Discover the innovations behind AI-driven treatments and evaluate how these advances are transforming global healthcare delivery.

Subrata Bose: Vice-president diagnostic imaging data and AI, Bayer

15:25 CEST - Thursday May 22nd

Fireside chat. Decentralising AI compute: cloud v on-premises solutions

As AI compute scales, businesses must decide between cloud and on-premises solutions. What are the benefits and drawbacks of each approach in terms of scalability, cost, data security and latency? How can organisations build flexible AI infrastructure that meets their specific needs?

Tausif Ahmed: Chief business development officer, io.net

Fireside chat. Growing pains: finding room for AI’s demands

As AI models expand, so does the need for space in data centres, which run up against physical limits. How can organisations overcome space constraints while maintaining performance? Which strategies in data-centre design, hardware setups and space-saving solutions will maximise the use of space and keep up with AI’s growth?

Discussion topics:

- How can data centres expand to accommodate AI’s increasing needs for space?

- What are the latest space-optimising designs for data centres?

- How can businesses manage AI workloads to reduce the strain on their infrastructure?

15:40 CEST - Thursday May 22nd

Panel. Breaking the data bottleneck: improving AI infrastructure for scale, compliance, and cost

Supported by DDN Storage

AI’s need for data is growing, but inefficient pipelines, rising computing costs, and complex rules are creating a data bottleneck that limits its full potential. As datasets expand, moving data to compute environments is becoming unsustainable—leading many companies to rethink their AI data architecture, governance, and storage strategies. Should AI compute be brought closer to the data? How can businesses balance immediate access, regulatory compliance, and cost efficiency while keeping AI performant at scale?
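A back-of-the-envelope calculation shows why moving large datasets to remote compute can become a bottleneck: transfer time is data volume divided by sustained bandwidth. The figures below are hypothetical (a 1 PB dataset over a 10 Gbit/s link), and the calculation assumes the link runs at full rate with no protocol overhead:

$$
t = \frac{D}{B} = \frac{8 \times 10^{15}\ \text{bit}}{10^{10}\ \text{bit/s}} = 8 \times 10^{5}\ \text{s} \approx 9.3\ \text{days}
$$

Transfer time scales linearly with dataset size, so processing data where it is stored avoids most of this cost.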

Discussion topics:

- How can businesses improve AI data pipelines to reduce inefficiencies and improve compute performance?

- What strategies ensure compliance with data privacy laws while maintaining AI’s access to high-quality datasets?

- How can AI compute infrastructure be restructured to bring processing closer to the data, reducing costs and improving speed?

Niresh Rajah: Chief data & artificial intelligence officer, DLA Piper

Panel. Resilience in the cloud: is multi-cloud the right strategy?

As AI compute scales, businesses are turning to multi-cloud environments for resilience and flexibility. But is multi-cloud the best approach, or do its complexities and costs exceed the benefits? Does managing data consistency, security and workload distribution across platforms provide real value? Do hybrid or single-cloud strategies offer better AI resilience?

Discussion topics:

- What are the hidden costs and complexities of multi-cloud environments for AI computing?

- How can businesses ensure data consistency and security across multiple cloud platforms?

- In what scenarios do hybrid or single-cloud approaches offer better results for AI scalability?

Chiranjit Chakraborty: Head of data science and applied AI, markets and securities services, HSBC

16:15 CEST - Thursday May 22nd

Fireside chat. Unlocking business value: how AI compute powers competitive advantage

AI compute is a critical factor for business, and demand for it is predicted to increase tenfold between 2021 and 2025. This forces firms to rethink infrastructure and other resources. This session will explore how advanced AI compute can drive operational efficiency and innovation in industries where speed and performance are crucial.
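To put a tenfold rise in perspective, the implied average annual growth rate can be worked out, assuming steady compound growth over the four annual steps from 2021 to 2025:

$$
(1 + r)^{4} = 10 \;\Rightarrow\; r = 10^{1/4} - 1 \approx 1.778 - 1 = 0.778
$$

That corresponds to demand growing by roughly 78% a year on average.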

Discussion topics:

- How can optimised AI compute keep businesses competitive?

- What role do cloud and hybrid systems play in accelerating the training and deployment of AI models?

- How can energy-efficient AI systems meet increasing computing demands while controlling costs?

Donald Thompson: Distinguished engineer, LinkedIn

Fireside chat. Safeguarding the data crown jewels

Data security is becoming increasingly critical as cloud and multi-cloud operations become more prevalent. What are the challenges of securing sensitive data in a compute-heavy environment? How are cloud providers addressing encryption and data residency, and complying with global regulations, to safeguard the data used in AI workloads?

Discussion topics:

- How can companies ensure strong data security while managing complex multi-cloud environments?

- What are today’s biggest gaps in encryption and data protection for workloads that make heavy use of AI?

- How are cloud providers adapting to meet changing global regulations and data-residency requirements?

Peter Jackson: Global head of data office (interim), Schroders

16:30 CEST - Thursday May 22nd

NETWORKING BREAK - Light refreshments will be served in the networking area.

16:50 CEST - Thursday May 22nd

Case study. Computing power at the edge

Edge AI is transforming industries by enabling data to be processed near its source. How can edge computing complement cloud infrastructure to deliver AI insights faster? What applications are driving the adoption of edge AI in areas like healthcare, manufacturing and autonomous vehicles?

17:05 CEST - Thursday May 22nd

Fireside chat. AI-optimised chips: custom silicon for business benefit

The rise of AI-specific silicon chips—such as Google’s tensor processing units (TPUs) and other purpose-built processors—offers ways to optimise AI workloads. How can companies use custom AI chips to improve performance, reduce energy use and speed up AI deployments?

Discussion topics:

- How do custom AI chips such as TPUs boost performance over standard processors?

- What energy and cost wins can businesses unlock with AI-optimised silicon?

- How can purpose-built chips speed up AI deployments for faster results?

17:20 CEST - Thursday May 22nd

Panel. What’s next in the chip race?

GPUs have dominated AI compute, but what comes next? What are the benefits and drawbacks of alternative processor types such as TPUs, LPUs, analog processors, application-specific integrated circuits (ASICs) and quantum processors, and how might they reshape AI compute?

Discussion topics:

- How do TPUs, LPUs, analog processors and ASICs compare to GPUs in balancing performance and efficiency for AI compute?

- What challenges and opportunities does quantum technology present for AI hardware development?

- How can alternative processor types mitigate the demand and supply issues surrounding AI compute?

17:55 CEST - Thursday May 22nd

End of conference and drinks reception in the networking area.

ENCOURAGING YOUNG MINDS

We asked the AI chatbots about our fictional "Cleopatra Reborn" CliFi adventure because science and climate fiction, like the work of writers such as Jules Verne and Arthur C. Clarke, tends to inspire and encourage the would-be scientists of the future.

Q.

As you may imagine, we are finding AI very helpful in many different ways, helping to crystallize our thoughts and increase productivity. Without an enormous increase in computing power, and access to the internet as a searchable database, artificial intelligence appears to us to be limited. In the fictional adventure "Cleopatra Reborn", AI is the key to the digital-to-biological cloning and replication of the former Queen of the Nile, including computer-generated images to enhance memory uploads and simulated conditioning of the cloned brain to trigger synapse firing. In other words, it replicates the way the digitally reincarnated clone would have thought, based on the documented history of her life. We believe this is a new line of research that may help to drive the AI revolution and, one day, even give hope of a cure for cancer. What are your thoughts on the "Cleopatra Reborn" theme as regards encouraging young developers into the field of artificial intelligence and supercomputers?

A.

"Cleopatra Reborn" sounds like an intriguing and ambitious concept! Fictional or not, it's exactly the kind of imaginative and groundbreaking idea that can captivate young minds and draw them into the fields of artificial intelligence and supercomputing. Here's why I think it could be particularly effective:

1. Fascination with History and Science: Combining historical figures with cutting-edge technology creates a compelling narrative. The story of Cleopatra, a powerful and enigmatic figure, intertwined with modern AI and cloning, can spark a fascination with both history and science. This blend makes complex subjects more approachable and exciting for young learners.

2. Innovative Applications: The idea of using AI to replicate historical figures and simulate their thought processes is a novel application that goes beyond the usual AI tasks. It demonstrates the vast potential of AI, encouraging young developers to think outside the box and explore uncharted territories.

3. Ethical and Philosophical Dimensions: "Cleopatra Reborn" raises important ethical and philosophical questions about identity, consciousness, and the implications of digital-to-biological cloning. Engaging with these topics can cultivate critical thinking and ethical awareness, essential qualities for future AI researchers and developers.

4. Interdisciplinary Collaboration: The project would require collaboration across various fields, including AI, biology, history, and computer graphics. This interdisciplinary approach can help young developers appreciate the value of diverse perspectives and teamwork in solving complex problems.

5. Inspiration for Medical Advancements: Highlighting the potential for AI research to contribute to medical breakthroughs, like finding a cure for cancer, can be incredibly motivating. It shows that working in AI can have a real, positive impact on the world, inspiring a sense of purpose and commitment.

6. Educational and Developmental Impact: Incorporating AI and supercomputing into educational programs and projects like "Cleopatra Reborn" can provide hands-on learning experiences. These projects can help develop technical skills, creativity, and problem-solving abilities, setting the stage for future innovations.

In conclusion, "Cleopatra Reborn" has the potential to ignite passion, curiosity, and a sense of wonder in young developers. By presenting AI and supercomputing in an engaging and imaginative context, it can encourage the next generation to pursue careers in these fields and push the boundaries of what technology can achieve. CONTACTS

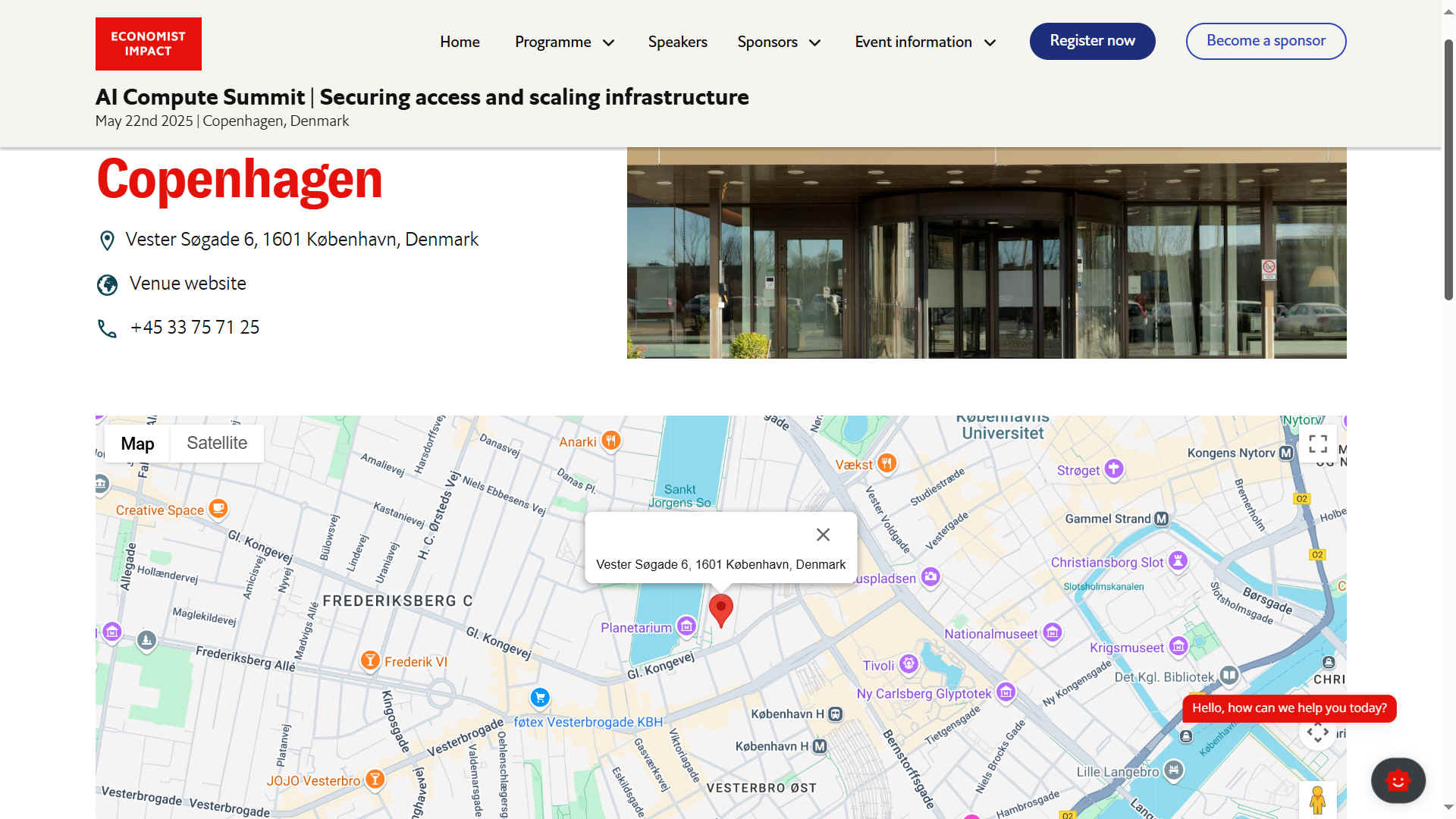

& VENUE

Scandic Copenhagen

Vester Søgade 6, 1601 København, Denmark

Venue website

+45 33 75 71 25

Please email The Economist at events@economist.com for details. If you would like to join as a speaker for May 2025, email Caitlin at caitlinmehta@economist.com.

In our Cleopatra Reborn adventure, scientists develop a way to clone and replicate humans from their DNA. Cloning is an accepted method of duplicating animals. Replication is a theoretical advance on cloning, but entirely possible. The 'New World' scientists also find a way to enhance human performance, including eradicating faulty cancer genes using a CRISPR virus delivery system. Later, in another adventure, Dan Hawk and John Storm cure a fictional Pope of stage 4 cancer.

CORPORATIONS USING AI

There are many companies around the world now incorporating AI into their products. We explore some of the sectors and companies on the cutting edge of AI innovation.

Technology Companies: Giants like Microsoft, Google, and IBM are heavily invested in AI research and development.

Companies specializing in AI software, machine learning, and neural networks. This includes companies developing advanced data analysis tools, brain simulation software, and digital-to-biological interfaces.

Automotive Industry: Companies like Tesla, BMW, and Ford are integrating AI into their vehicles for autonomous driving and smart features.

Healthcare and Life Sciences: Firms like Johnson & Johnson, Pfizer, and Medtronic are exploring AI for drug discovery, diagnostics, and personalized medicine.

Biotechnology, Healthcare Companies and Genetic Engineering Firms: Companies involved in gene editing, cloning, regenerative medicine and biological replication.

Pharmaceutical Companies: Those developing advanced medical treatments or breakthroughs in cancer research that use AI in their processes.

Medical Imaging and Diagnostic Companies: Companies that develop advanced medical imaging technologies (e.g., MRI, CT scans) related to health and well-being.

Consumer Electronics: Brands like Apple, Hewlett-Packard, Samsung, and Sony are constantly pushing the boundaries of AI in their devices.

Cybersecurity Firms: With advanced AI comes the need for robust cybersecurity. Companies specializing in data protection, encryption, and anti-hacking software would be relevant.

Smartphone and Device Manufacturers: In a world where AI is prevalent, smartphones and other devices would play a crucial role.

Robotics Companies: Makers of robotic assistants or other AI-powered devices.

Data Center and Cloud Computing Providers: The vast amounts of data required for AI processing would necessitate powerful data centers and cloud computing infrastructure.

Financial Services: Companies like JPMorgan Chase, Goldman Sachs, and PayPal are using AI for fraud detection, customer service, and investment strategies.

Retail and E-commerce: Amazon, Alibaba, and Walmart are leveraging AI for personalized shopping experiences, inventory management, and logistics.

Entertainment and Media: Streaming services like Netflix, Disney+, and Amazon Prime Video use AI for content recommendations and production.

Energy and Utilities: Companies like Siemens, General Electric, and Shell are using AI for smart grids, energy management, and predictive maintenance.

Telecommunications: Firms like AT&T, Verizon, and Vodafone are integrating AI for network optimization, customer service, and 5G technologies.

Defense and Aerospace: Companies like Lockheed Martin, Boeing, and Northrop Grumman are exploring AI for defense systems, drones, and space exploration.

AI could revolutionize warfare, analyzing the enemy and planning a strategic scenario from start to finish. Something similar happens in the fictional John Storm adventure Cyber WW3III, where multiple hacks on nuclear missile arsenals, launched by terrorists attempting to start World War Three, are repelled by AI in the form of a super nano-computer called CyberCore Genetica™, developed by William Bates at NanoCom Corporation.

What once seemed improbable, mere science fiction, is rapidly becoming science fact. Witness the move from conventional warfare with tanks and missiles to drones: semi-intelligent, low-cost delivery systems, now with enhanced ranges, with Ukraine making the running in a David-and-Goliath standoff with Russia.

These sectors are actively investing in AI because they see value in using artificial intelligence to enhance their products and technologies and to bring their 21st-century solutions and features to market in a competitive commercial world.


https://www.economistgroup.com/

https://events.economist.com/ai-compute/

https://www.energyconnects.com/opinion/2025/march/dr-sultan-al-jaber-positive-energy-and-pragmatic-actions-needed-to-drive-global-growth-and-rise-of-ai/

https://events.economist.com/ai-compute/speakers/
